NVIDIA Message Board older than one year ago

- Nvidia Shield sales are 'great,' production ramping up soon

Sales of Nvidia's Android-powered gaming handheld, the Nvidia Shield, have inspired confidence in the platform's future from Nvidia's leaders, GamesIndustry International reports.

Senior Director of Investor Relations Chris Evenden describes sales as "great" and says "everything that we shipped so far has sold out ... we're just starting to ramp production."

Despite Nvidia's optimism for the Shield, the company's Q2 reports show a 6.4 percent drop in revenue and a 19 percent decline in net income year-over-year. Nvidia CEO Jen-Hsun Huang says the company expects a "strong second half" of the year due to the Shield launching outside of the U.S. and the introduction of more Tegra 4-powered devices to the market.

- Nvidia Kepler mobile GPU 'Project Logan' supports Unreal Engine 4

Nvidia has unveiled its new mobile graphics processor, built on the same Kepler architecture found in its latest PC graphics cards. Dubbed Project Logan, this new chip supports Unreal Engine 4 and, according to comments from Epic Games CEO Tim Sweeney, will empower mobile devices with "the same high-end graphics hardware capabilities exposed via DirectX 11 on PC games and on next-generation consoles."

Right, it's not just Unreal Engine 4 - Nvidia says Project Logan supports popular graphics standards OpenGL 4.4 and DirectX 11, and uses one-third the power of GPUs found in "leading tablets, like the iPad 4." Project Logan runs at 2.2 teraflops, with more raw processing power than can be found in the PS3's GPU.

Above, you can see a video demo of Nvidia's faux-face "Ira" rendered on Project Logan in real-time, and embedded past the break you'll find Nvidia's "Island" demo running on the mobile chip. Project Logan is still in the prototyping phase, so don't expect to see it in the wild anytime soon.

- The Next Generation Of Mobile Graphics Is Here, And It's Amazing.

You may remember Ira, the facial rendering demo Nvidia used to show off the power of the latest desktop graphics technology. He's even more impressive when he's running on Project Logan, Nvidia's Kepler-powered next-generation mobile processor. These are ...

- Nvidia Shield shipping on July 31 to hands near you

Nvidia's Android-powered handheld gaming system Shield will ship July 31, Nvidia announced. The system was originally expected to launch June 27, but shipment was pushed back to July due to a mechanical issue found during the console's QA process.

Our hands-on time with the Shield found some similarities to the Xbox 360 controller, with a little more bulk than a PlayStation Vita, thanks in no small part to its 5-inch, multi-touch, 720p display.

- Following a last-minute delay that pushed the launch from June to July, today Nvidia confirmed with

Following a last-minute delay that pushed the launch from June to July, today Nvidia confirmed with Kotaku that the Shield handheld is officially launching on July 31.

- One Day Before Launch, Nvidia's Shield Slips To July

Remember last week, when Nvidia announced their Shield portable Android gaming system was launching tomorrow? Yeah, about that... Citing a mechanical issue discovered during incredibly last-minute QA testing, the PC game-streaming Shield will now be drop...

- Nvidia Shield pushed back to July due to mechanical issue

The Nvidia Shield was supposed to launch this week, but Nvidia has announced that a third-party mechanical issue will push that release back until sometime in July. The exact date has yet to be determined.

"During our final QA process, we discovered a mechanical issue that relates to a 3rd party component," the Nvidia statement reads. "We want every Shield to be perfect, so we have elected to shift the launch date to July. We'll update you as soon as we have an exact date."

Last week, Nvidia announced it was knocking $50 off the Android-powered handheld device, bringing the Nvidia Shield's price tag down to $300. In May, we visited the Nvidia campus and got our hands on the Nvidia Shield, which sports a 5-inch 720p display, has a Tegra 4 chip inside and can stream games from your PC - granted you have a GTX 650 GPU or better in your rig.

- The Gainward Nvidia GTX 760, Benchmarked And Reviewed

Nvidia has continued to roll out the GeForce 700 series this week with the GTX 760, the generation's first truly mainstream product, with pricing well under that of the GTX Titan, 780 and even the 770, which at $400 still costs more than the average gam...

- Nvidia's Shield Gets A Price Drop And A June 27 Release

Last month Nvidia's Project Shield Android handheld got a shortened name, a release window of June, and a $349 price tag. With June quickly coming to a close, the Shield is coming in hot on June 27, with $50 knocked off the price to sweeten the deal. Cu...

- The Important Thing Is Gearbox CEO Randy Pitchford Sings In This Video

Something about the Nvidia Shield, or something. Who knows? Here we get a peek into the office and home of Gearbox president and CEO Randy Pitchford, and at 5:08 he plays guitar and sings. He also plays piano and performs magic. So yes, this video is p...

- How Wet Does Tegra 4 Make Dead Trigger 2? So Wet.

Madfinger Games' ambitious follow-up to zombie shooter Dead Trigger is one of the poster games for Nvidia's new Tegra 4 chip, the power behind the upcoming Shield handheld. Today the developer has released a video demonstrating how much moister the game ...

- Interested in Tegra 4 gaming but don't want to drop $350 on a Shield handheld?

Interested in Tegra 4 gaming but don't want to drop $350 on a Shield handheld? Asus' newly-announced Transformer Pad Infinity will be one of the first non-Nvidia devices to feature the world's fastest mobile processor.

- GeForce GTX 770 Review: Adding Value to High-End GFX?

Having taken the covers off the GeForce GTX 780 a week ago, Nvidia is ready to release their next part in the GeForce 700 series. Giving us our first look at the GeForce GTX 770 is Gainward, with their special Phantom edition card featuring an upgraded co...

- Week In Tech: Hands On With Those New Games Consoles

Ha, sorry. Not really. But it got your attention. And there’s a thin tendril of truth in it. It’s been a busy week in hardware and in my mortal hands I hold a laptop containing AMD’s Jaguar cores. The very same cores as found in the freshly minted games consoles from Microsoft and Sony. So what are they like and what does it mean for PC gaming?

Meanwhile, Nvidia drops a price bomb of the bad kind and Intel has some new chips on the way. Read on for the gruesome details.

- Nvidia GeForce GTX 780 Review: The Titan Descendant

The GeForce GTX 680 was Nvidia’s first 28nm part featuring 1536 CUDA cores, 128 texture units and 32 ROP units. Since release it has remained Nvidia's fastest single-GPU graphics card of the series, second only to the dual-GPU GTX 690 which features a pai...

- Nvidia Shield pre-orders open early (as in, right now)

Nvidia canceled its forced wait time to pre-order an Nvidia Shield, allowing anyone to commit to the $350 Android handheld as of now. The Nvidia Shield is available for pre-order directly through Nvidia or through select retailers: GameStop, Micro Center...

The Nvidia Shield - previously known as Project Shield - is a handheld gaming console powered by Android. It has a five-inch retinal multi-touch screen capable of 720p, 16GB of internal storage and can stream your PC games, granted you have a GTX 650 GPU or better in your PC.

- Modern Family Is Already Enjoying Nvidia's Shield

Mere days after the announcement of its $350 price tag and June release date, Nvidia's Shield Android handheld is already showing up in the background of popular television shows. Look what Modern Family's Luke Dunphy has traded in his Nintendo 3DS for. ...

- Nvidia’s Shield Out Next Month For Many Moneydollars

Nvidia’s Shield is technically an Android-based device, but a) what kind of mighty android machine overlord needs a shield and b) we’re a PC gaming website. So then, why am I posting about this rare breed of land-dwelling game clam? Well, because it flawlessly streams just about any PC game you can throw at it – or at least, it will once that feature leaves beta a couple months after launch. Do you feel like an itsy bitsy screen, infinitely twiddle-able thumbsticks, and the ability to play anywhere in the whole wide worrrrrrrrrrrld (as long as your PC is, er, pretty close by) will greatly enhance your experience? Then stream your eyeballs past the break for details.

- Nvidia Shield: Joystiq goes hands-on

The Nvidia Shield arrives next month for $349.99, and yesterday I got to sit down with the final version available at retail. The first thing I noticed was the heft: bulkier than a PS Vita, but no less comfortable.

Where the PS Vita sacrificed bigger buttons for smaller form factor, the Nvidia Shield takes a lot of inspiration from the Xbox 360 controller. In fact, the left and right triggers feel identical to the Xbox 360 and, more or less, so does the d-pad. The one big difference from Microsoft's gamepad is the symmetrical analog sticks.

- Nvidia Shield launches in June for $350, pre-orders open May 20

Nvidia's Project Shield - now officially dubbed Nvidia Shield - will launch before the end of June for $349.99, Nvidia has announced. Pre-orders for the Tegra 4-powered Android handheld will open on May 20, through select online and brick-and-mortar reta...

With the price and pre-order date, Nvidia announced five new games coming to the platform: Broken Age and Costume Quest from Double Fine, Flyhunter: Origins from Steel Wool Games, Skiing Fred from Dedalord Games and Chuck's Challenge from Chuck Sommerville's Niffler; you may recall Sommerville of Chip's Challenge fame.

The Nvidia Shield is running Android 4.2.1, sports a 5-inch retinal display capable of 720p and has 16GB of internal storage, expandable through an SD card slot on the back. Other hardware features include a built-in mic and GPS, plus a mini-HDMI out in the back. All Nvidia Shields will include two free games: Sonic the Hedgehog 4: Episode 2 and Expendable: Rearmed.

We'll have a hands-on video with the final Nvidia Shield and some impressions up soon.

- You Can Download Next-Gen Human Faces Right Now

"Ira" is the name given to the bald guy you may have seen lately in some next-gen tech videos from Nvidia and Activision. He's one more step down the road towards more believable artificial characters. If you'd like a little taste of the next-g...

- Week In Tech: Nvidia’s ‘New’ Graphics Cards

Back in Feb we had a little chin wag about the mad dash of annual graphics hardware launches slowing to a saunter. We can add a little more flesh to the bones of that story this week, with some pretty plausible looking details of Nvidia’s upcoming plans – and further confirmation of nothing new from AMD. It’s worth a quick dip into the mucky waters of rumour for anyone pondering a GPU upgrade or generally a new rig as some new kit – of sorts – is imminent.

- Fiddle With Nvidia’s GraphicsFace Features

It feels like it was only, ooh, 1 month and 24 days since we last risked our collective sanity. We stared into the cold, shark-like eyes of the technological advancement of NvIdIa’S FaceWorks and lived. But at what cost? Back then, we were given a peep into the future of GraphicsFace with Digital Ira, a sadly uninteractive demonstration of what gamefaces will be like in the future. It looked impressive, but with the caveat that it was shown on stage and running on a Titan, Nvidia’s mahoosive card of graphics. Well the tech monolith has just released the demonstration for everyone to play with. If you fancy making a high-fidelity head gurn, then your fetish is well catered for.

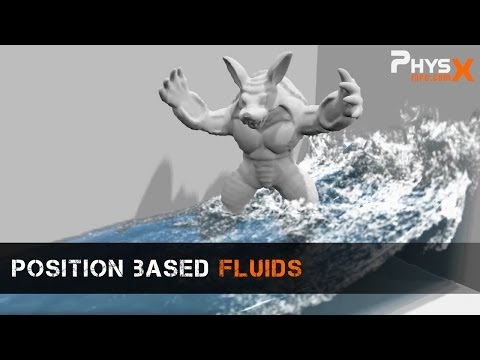

- Making A Splash: Nvidia’s Real-Time Fluid Physics

I feel like I should apologise for the headline but Nvidia call their middleware physics engine PhysX, for crying out loud. ‘Making a splash’ is almost Nabokovian in comparison. You may recall recent advances in convincing/crazed coiffures and I care about that about as much as I care about the latest floppy-fringed hair fashions in the real world. Not a jot. Fluid physics though? Ever since the invention of physics, which was sometime just before I balanced bricks on a plank to create a see-saw bridge in Half Life 2, I’ve been waiting for a game with proper water. The latest PhysX tech demo got my juices flowing and you can see it below.

- Mind-Blowingly Perfect Water Simulation Is Now A Reality

Simulating the physics of water was always tricky and game engines sometimes still have to use dodgy mechanics to make it feel real. But the above demonstration of this new fluid simulation technique proves that slowly but surely we're getting there. Phy...

- Week in Tech: Intel Overclocking, Bonkers-Wide Screens

Don’t sling your old CPU on eBay just yet. Too many Rumsfeldian known unknowns remain, never mind the unknown unknowns. But the known knowns suggest Intel is bringing back at least a sliver of overclocking action to its budget CPUs. It arrives with the incoming and highly imminent Haswell generation of Intel chips and it might help restore a little fun to the budget CPU market, not to mention a little faith in Intel. Next up, local game streaming. Seems like a super idea to me. So, I’d like to know, well, what you’d like to know about streaming. Then I’ll get some answers for you. Meanwhile, game bundles or bagging free games when you buy PC components. Do you care? I’ve also had a play with the latest bonkers-wide 21:9-aspect PC monitors…

- Gurnaphics Card: NVIDIA’s Face Works

Faces are everywhere in games. NVIDIA noticed this and has been on a 20-year odyssey to make faces more facey and less unfacey (while making boobs less booby, if you’ll remember the elf-lady Dawn). Every few years they push out more facey and less unfacey face tech and make it gurn for our fetishistic graphicsface pleasure. Last night at NVIDIA’s GPU Technology Conference, NVIDIA founder Jen-Hsun Huang showed off Face Works, the latest iteration. Want to see how less unfacey games faces can be?

- PSA: Tomb Raider gets another patch, Nvidia updates beta drivers

Tomb Raider on PC got another patch this weekend, launched in conjunction with new beta drivers from Nvidia for GeForce GPUs. Download the latest GeForce 314.21 drivers direct from Nvidia.

Since its launch, GeForce Tomb Raider players have seen "major performance and stability issues" trying to play the game at max settings. Complaints include problems with TressFX and tessellation tripping the game up, at least for those with 600-series cards.

There are more Tomb Raider patches incoming from Crystal Dynamics, based on ongoing player feedback.

- Tomb Raider Performance Test: Graphics and CPUs

Although this year's Tomb Raider reboot made our latest list of most anticipated PC games, I must admit that it was one of the games I was least looking forward to from a performance perspective...

- NVidia Apologises For Crummy Tomb Raider Performance

NVidia have written a little apology note to all suffering with Tomb Raider graphics issues. Although I’ve yet to receive chocolates. I mentioned in yesterday’s Tomb Raider review that I had some issues with running the game on prettier graphics, and it seems I’m not alone. Apart from the silly hair mode reducing NVidia cards to jelly, I had peculiar problems with the OSD occasionally causing the game to judder, and couldn’t play above the normal settings. Extraordinarily, as spotted by Joystiq, this is because for some reason NVidia didn’t receive final code of the game until the weekend before release, so didn’t have a chance to create an update to accommodate it all.
